At CHI2019, HCI@KAIST presents 14 papers, 6 late breaking works and 3 workshops.

One best paper and one honorable mention are included. These works are from 11 labs of 4 different schools and departments at KAIST. We thank our outstanding colleagues and collaborators from industry, research centers and universities around the world.

We will be hosting the KAIST HOSPITALITY NIGHT event on May 7th (Tue).

Join us at HCI@KAIST HOSPITALITY NIGHT!

Paper & Notes

PicMe: Interactive Visual Guidance for Taking Requested Photo Composition

Monday 11:00 - 12:20 | Session of On the Streets | Room: Boisdale 1

- Minju Kim, Graduate School of Culture Technology, KAIST

- Jungjin Lee, KAI Inc., Daejeon

PicMe is a mobile application that provides interactive onscreen guidance that helps the user take pictures of a composition that another person requires. Once the requester captures a picture of the desired composition and delivers it to the user (photographer), a 2.5D guidance system, called the virtual frame, guides the user in real-time by showing a three-dimensional composition of the target image (i.e., size and shape). In addition, according to the matching accuracy rate, we provide a small-sized target image in an inset window as feedback and edge visualization for further alignment of the detail elements. We implemented PicMe to work fully in mobile environments. We then conducted a preliminary user study to evaluate the effectiveness of PicMe compared to traditional 2D guidance methods. The results show that PicMe helps users reach their target images more accurately and quickly by giving participants more confidence in their tasks.

Co-Performing Agent: Design for Building User-Agent Partnership in Learning and Adaptive Services

Wednesday 16:00-17:20 | Session of The One with Bots | Room: Boisdale 1

- Da-jung Kim, Department of Industrial Design, KAIST

- Youn-kyung Lim, Department of Industrial Design, KAIST

Intelligent agents have become prevalent in everyday IT products and services. To improve an agent’s knowledge of a user and the quality of personalized service experience, it is important for the agent to cooperate with the user (e.g., asking users to provide their information and feedback). However, few works inform how to support such user-agent co-performance from a human-centered perspective. To fill this gap, we devised Co-Performing Agent, a Wizard-of-Oz-based research probe of an agent that cooperates with a user to learn by helping users to have a partnership mindset. By incorporating the probe, we conducted a two-month exploratory study, aiming to understand how users experience co-performing with their agent over time. Based on the findings, this paper presents the factors that affected users’ co-performing behaviors and discusses design implications for supporting constructive co-performance and building a resilient user–agent partnership over time.

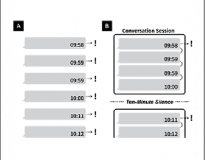

Ten-Minute Silence: A New Notification UX of Mobile Instant Messenger

Wednesday 12:00-12:20 | Session of UX Methods | Room: Forth

- In-geon Shin, Department of Industrial Design, KAIST

- Jin-min Seok, Department of Industrial Design, KAIST

- Youn-kyung Lim, Department of Industrial Design, KAIST

People receive a tremendous number of messages through mobile instant messaging (MIM), which generates crowded notifications. This study highlights our attempt to create a new notification rule to reduce this crowdedness, which can be recognized by both senders and recipients. We developed an MIM app that provides only one notification per conversation session, which is a group of consecutive messages distinguished based on a ten-minute silence period. Through the two-week field study, 20,957 message logs and interview data from 17 participants revealed that MIM notifications affect not only the recipients’ experiences before opening the app but also the entire conversation experience, including that of the senders. The new notification rule created new social norms for the participants’ use of MIM. We report themes about the changes in the MIM experience, which will expand the role of notifications for future MIM apps.
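
The notification rule described above can be sketched in a few lines. This is only an illustrative sketch, not the authors' implementation: the function name `notification_flags`, the assumption that timestamps arrive sorted within a single conversation, and the choice to treat a gap of exactly ten minutes as a new session are all assumptions made here.

```python
from datetime import datetime, timedelta

# Threshold taken from the abstract; the boundary handling (>=) is an assumption.
SILENCE = timedelta(minutes=10)


def notification_flags(timestamps, silence=SILENCE):
    """Return one boolean per message: True if the message should notify.

    Assumes `timestamps` is sorted ascending and belongs to one conversation.
    A message opens a new session (and therefore notifies) when it is the
    first message or arrives at least `silence` after the previous message.
    """
    flags = []
    previous = None
    for ts in timestamps:
        starts_session = previous is None or ts - previous >= silence
        flags.append(starts_session)
        previous = ts
    return flags


if __name__ == "__main__":
    start = datetime(2019, 5, 6, 9, 0)
    offsets_in_minutes = [0, 2, 5, 20, 21]
    stamps = [start + timedelta(minutes=m) for m in offsets_in_minutes]
    for minute, notify in zip(offsets_in_minutes, notification_flags(stamps)):
        print(f"message at +{minute:2d} min -> notify: {notify}")
```

In this hypothetical log, only the first message and the one arriving after the fifteen-minute gap would produce a notification; the remaining messages join an existing session silently.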

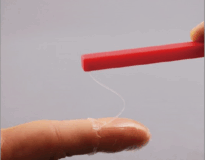

Like A Second Skin: Understanding How Epidermal Devices Affect Human Tactile Perception

Thursday 14:00 - 15:20 | Paper Session: Skin and Textiles | Hall 2

- Aditya Shekhar Nittala, Saarland University

- Klaus Kruttwig, INM-Leibniz Institute

- Jaeyeon Lee, School of Computing, KAIST

- Roland Bennewitz, INM-Leibniz Institute

- Eduard Arzt, INM-Leibniz Institute

- Jürgen Steimle, Saarland University

The emerging class of epidermal devices opens up new opportunities for skin-based sensing, computing, and interaction. Future design of these devices requires an understanding of how skin-worn devices affect the natural tactile perception. In this study, we approach this research challenge by proposing a novel classification system for epidermal devices based on flexural rigidity and by testing advanced adhesive materials, including tattoo paper and thin films of poly(dimethylsiloxane) (PDMS). We report on the results of three psychophysical experiments that investigated the effect of epidermal devices of different rigidity on passive and active tactile perception. We analyzed human tactile sensitivity thresholds, two-point discrimination thresholds, and roughness discrimination abilities on three different body locations (fingertip, hand, forearm). Generally, a correlation was found between device rigidity and tactile sensitivity thresholds as well as roughness discrimination ability. Surprisingly, thin epidermal devices based on PDMS with a hundred times the rigidity of commonly used tattoo paper resulted in comparable levels of tactile acuity. The material offers the benefit of increased robustness against wear and the option to re-use the device. Based on our findings, we derive design recommendations for epidermal devices that combine tactile perception with device robustness.

VirtualComponent: a Mixed-Reality Tool for Designing and Tuning Breadboarded Circuits

Wednesday 10:00 - 10:20 | Session of Fabricating Electronics | Room: Hall 1

- Yoonji Kim, Department of Industrial Design, KAIST

- Youngkyung Choi, Department of Industrial Design, KAIST

- Hyein Lee, Department of Industrial Design, KAIST

- Geehyuk Lee, School of Computing, KAIST

- Andrea Bianchi, Department of Industrial Design, KAIST

Prototyping electronic circuits is an increasingly popular activity, supported by the work of researchers who have developed toolkits to improve the design, debugging and fabrication of electronics. While past work mainly dealt with circuit topology, in this paper we propose a system for determining or tuning the values of the circuit components. Based on the results of a formative study with seventeen makers, we designed VirtualComponent, a mixed-reality tool that allows users to digitally place electronic components on a real breadboard, tune their values in software, and see these changes applied to the physical circuit in real-time. VirtualComponent is composed of a set of plug-and-play modules containing banks of components, and a custom breadboard managing the connections and components’ values. Through example usages and the results of an informal study with twelve makers, we demonstrate that VirtualComponent is easy to use and encourages users to test components’ value configurations with little effort.

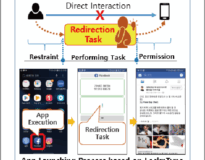

LocknType: Lockout Task Intervention for Discouraging Smartphone App Use

Monday 14:00-15:20 | Paper Session: Human-Smartphone Interaction | Hall 2

- Jaejeung Kim, Graduate School of Knowledge Service Engineering, KAIST

- Joonyoung Park, Graduate School of Knowledge Service Engineering, KAIST

- Hyunsoo Lee, Graduate School of Knowledge Service Engineering, KAIST

- Minsam Ko, College of Computing, Hanyang

- Uichin Lee, Graduate School of Knowledge Service Engineering, KAIST

Instant access and gratification make it difficult for us to self-limit the use of smartphone apps. We hypothesize that a slight increase in the interaction cost of accessing an app could successfully discourage app use. We propose a proactive intervention that requests users to perform a simple lockout task (e.g., typing a fixed-length number) whenever a target app is launched. We investigate how a lockout task with varying workloads (i.e., pause only without number input, 10-digit input, and 30-digit input) influences a user’s decision making, through a 3-week, in-situ experiment with 40 participants. Our findings show that even the pause-only task that requires a user to press a button to proceed discouraged an average of 13.1% of app use, and the 30-digit-input task discouraged 47.5%. We derived determinants of app use and non-use decision making for a given lockout task. We further provide implications for persuasive technology design for discouraging undesired behaviors.
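
As a rough illustration of the lockout task described above, the sketch below generates a digit string of a given length and checks the user's input against it. It is not the LocknType implementation: the names `WORKLOADS`, `make_challenge` and `may_launch` are hypothetical, and drawing the digits at random is an assumption, since the abstract only says the number has a fixed length.

```python
import secrets

# The three workload conditions named in the abstract, expressed as digit counts.
# The dictionary keys are hypothetical labels chosen for this sketch.
WORKLOADS = {"pause_only": 0, "10_digit": 10, "30_digit": 30}


def make_challenge(num_digits):
    """Return a digit string of the requested length.

    Random digits are an assumption of this sketch. A length of zero yields
    an empty string, which models the pause-only condition (no number input).
    """
    return "".join(secrets.choice("0123456789") for _ in range(num_digits))


def may_launch(challenge, typed):
    """Allow the target app to open only if the typed text matches exactly."""
    return typed == challenge


if __name__ == "__main__":
    for name, digits in WORKLOADS.items():
        challenge = make_challenge(digits)
        print(f"{name}: user must type {challenge!r} before the app opens")
```

In the pause-only condition the challenge is empty, so pressing a button with no typed input would be enough to proceed.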

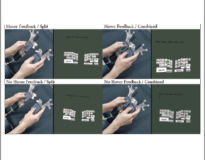

Evaluating the Combination of Visual Communication Cues for HMD-based Mixed Reality Remote Collaboration

Tuesday 09:00 - 10:20 | Session of X Reality Evaluations | Room Dochart 2

- Seungwon Kim, School of ITMS, University of South Australia

- Gun Lee, School of ITMS, University of South Australia

- Weidong Huang, Swinburne University of Technology

- Hayun Kim, Graduate School of Culture Technology, KAIST

- Woontack Woo, Graduate School of Culture Technology, KAIST

- Mark Billinghurst, School of ITMS, University of South Australia

Many researchers have studied various visual communication cues (e.g. pointer, sketching, and hand gesture) in Mixed Reality remote collaboration systems for real-world tasks. However, the effect of combining them has not been so well explored. We studied the effect of these cues in four combinations: hand only, hand + pointer, hand + sketch, and hand + pointer + sketch, with three problem tasks: Lego, Tangram, and Origami. The study results showed that the participants completed the task significantly faster and felt a significantly higher level of usability when the sketch cue is added to the hand gesture cue, but not with adding the pointer cue. Participants also preferred the combinations including hand and sketch cues over the other combinations. However, using additional cues (pointer or sketch) increased the perceived mental effort and did not improve the feeling of co-presence. We discuss the implications of these results and future research directions.

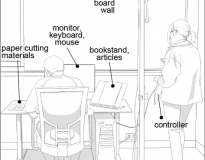

The Effects of Interruption Timing on Autonomous Height-Adjustable Desks that Respond to Task Changes

Tuesday 11:00 - 12:20 | Session of In the Office | Room Alsh 1

- Bokyung Lee, Department of Industrial Design, KAIST

- Sindy Wu, School of computer science and technology, KAIST

- Maria Jose Reyes, Department of Industrial Design, KAIST

- Daniel Saakes, Department of Industrial Design, KAIST

Actuated furniture, such as electric adjustable sit-stand desks, helps users vary their posture and contributes to comfort and health. However, studies have found that users rarely initiate height changes. Therefore, in this paper, we look into furniture that adjusts itself to the user’s needs. A situated interview study indicated task-changing as an opportune moment for automatic height adjustment. We then performed a Wizard of Oz study to find the best timing for changing desk height to minimize interruption and discomfort. The results are in line with prior work on task interruption in graphical user interfaces and show that the table should change height during a task change. However, the results also indicate that until users build trust in the system, they prefer actuation after a task change to experience the impact of the adjustment.

SmartManikin: Virtual Humans with Agency for Design Tools

Tuesday 16:00 - 17:20 | Session of Design Tools | Room Alsh 1

- Bokyung Lee, Department of Industrial Design, KAIST

- Taeil Jin, Graduate School of Culture Technology, KAIST

- Sung-Hee Lee, Faculty of Art and Design, University of Tsukuba

- Daniel Saakes, Department of Industrial Design, KAIST

When designing comfort and usability in products, designers need to evaluate aspects ranging from anthropometrics to use scenarios. Therefore, virtual and poseable mannequins are employed as a reference in early-stage tools and for evaluation in the later stages. However, tools to intuitively interact with virtual humans are lacking. In this paper, we introduce SmartManikin, a mannequin with agency that responds to high-level commands and to real-time design changes. We first captured human poses with respect to desk configurations, identified key features of the pose and trained regression functions to estimate the optimal features at a given desk setup. The SmartManikin’s pose is generated by the predicted features as well as by using forward and inverse kinematics. We present our design, implementation, and an evaluation with expert designers. The results revealed that SmartManikin enhances the design experience by providing feedback concerning comfort and health in real time.

Slow Robots for Unobtrusive Posture Correction

Tuesday 09:00 - 10:20 | Session of Weighty Interactions | Room Dochart 1

- Joon-Gi Shin, Department of Industrial Design, KAIST

- Eiji Onchi, Graduate school of comprehensive human science, University of Tsukuba

- Maria Jose Reyes, Department of Industrial Design, KAIST

- Junbong Song, TeamVoid

- Uichin Lee, Graduate School of Knowledge Service Engineering, KAIST

- Seung-Hee Lee, Faculty of Art and Design, University of Tsukuba

- Daniel Saakes, Department of Industrial Design, KAIST

Prolonged static and unbalanced sitting postures during computer usage contribute to musculoskeletal discomfort. In this paper, we investigated the use of a very slow moving monitor for unobtrusive posture correction. In a first study, we identified display velocities below the perception threshold and observed how users (without being aware) responded by gradually following the monitor’s motion. From the result, we designed a robotic monitor that moves imperceptibly to counterbalance unbalanced sitting postures and induces posture correction. In an evaluation study (n=12), we had participants work for four hours without and with our prototype (8 in total). Results showed that actuation increased the frequency of non-disruptive swift posture corrections.

How to Design Voice Based Navigation for How-To Videos

Monday 11:00 - 12:20 | Interacting with Videos |

- Minsuk Chang, School of Computing, KAIST

- Anh Truong, Adobe Research, Stanford University

- Oliver Wang, Adobe Research

- Maneesh Agrawala, Stanford University

- Juho Kim, School of Computing, KAIST

When watching how-to videos related to physical tasks, users’ hands are often occupied by the task, making voice input a natural fit. To better understand the design space of voice interactions for how-to video navigation, we conducted three think-aloud studies using: 1) a traditional video interface, 2) a research probe providing a voice controlled video interface, and 3) a wizard-of-oz interface. From the studies, we distill seven navigation objectives and their underlying intents: pace control pause, content alignment pause, video control pause, reference jump, replay jump, skip jump, and peek jump. Our analysis found that users’ navigation objectives and intents affect the choice of referent type and referencing approach in command utterances. Based on our findings, we recommend to 1) support conversational strategies like sequence expansions and command queues, 2) allow users to identify and refine their navigation objectives explicitly, and 3) support the seven interaction intents.

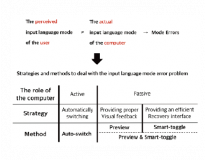

Diagnosing and Coping with Mode Errors in Korean-English Dual-language Keyboard

Monday 14:00 - 15:20 | Paper Session: Human-Smartphone Interaction | Hall 2

- Sangyoon Lee, School of Computing, KAIST

- Jaeyeon Lee, School of Computing, KAIST

- Geehyuk Lee, School of Computing, KAIST

In countries where languages with non-Latin characters are prevalent, people use a keyboard with two language modes, namely the native language and English, and often experience mode errors. To diagnose the mode error problem, we conducted a field study and observed that 78% of the mode errors occurred immediately after application switching. We implemented four methods (Auto-switch, Preview, Smart-toggle, and Preview & Smart-toggle) based on three strategies to deal with the mode error problem and conducted field studies to verify their effectiveness. In the studies considering Korean-English dual input, Auto-switch was ineffective. On the contrary, Preview significantly reduced the mode errors from 75.1% to 41.3%, and Smart-toggle saved typing cost for recovering from mode errors. In Preview & Smart-toggle, Preview reduced mode errors and Smart-toggle handled 86.2% of the mode errors that slipped past Preview. These results suggest that Preview & Smart-toggle is a promising method for preventing mode errors for the Korean-English dual-input environment.

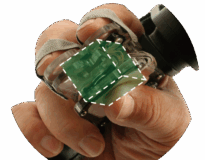

TORC: A Virtual Reality Controller for In-Hand High-Dexterity Finger Interaction

Thursday 11:00 - 12:20 | Paper Session: Unexpected interactions | Clyde Auditorium

- Jaeyeon Lee, School of Computing, KAIST

- Mike Sinclair, Microsoft Research

- Mar Gonzalez-Franco, Microsoft Research

- Eyal Ofek, Microsoft Research

- Christian Holz, Microsoft Research

Recent hand-held controllers have explored a variety of haptic feedback sensations for users in virtual reality by producing both kinesthetic and cutaneous feedback from virtual objects. These controllers are grounded to the user’s hand and can only manipulate objects through arm and wrist motions, not using the dexterity of their fingers as they would in real life. In this paper, we present TORC, a rigid haptic controller that renders virtual object characteristics and behaviors such as texture and compliance. Users hold and squeeze TORC using their thumb and two fingers and interact with virtual objects by sliding their thumb on TORC’s trackpad. During the interaction, vibrotactile motors produce sensations to each finger that represent the haptic feel of squeezing, shearing or turning an object. Our evaluation showed that using TORC, participants could manipulate virtual objects more precisely (e.g., position and rotate objects in 3D) than when using a conventional VR controller.

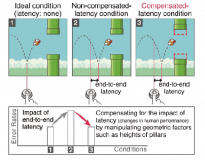

Geometrically Compensating Effect of End-to-End Latency in Moving-Target Selection Games

Wednesday 16:00-17:20 | Session of Gameplay Analysis and Latency | Room Hall 2

- Injung Lee, Graduate School of Culture Technology, KAIST

- Sunjun Kim, Aalto University

- Byungjoo Lee, Graduate School of Culture Technology, KAIST

Effects of unintended latency on gamer performance have been reported. End-to-end latency can be corrected by post-input manipulation of activation times, but this gives the player an unnatural gameplay experience. For moving-target selection games such as Flappy Bird, the paper presents a predictive model of latency on error rate and a novel compensation method for the latency effects by adjusting the game’s geometry design – e.g., by modifying the size of the selection region. Without manipulation of the game clock, this can keep the user’s error rate constant even if the end-to-end latency of the system changes. The approach extends the current model of moving-target selection with two additional assumptions about the effects of latency: (1) latency reduces players’ cue-viewing time and (2) pushes the mean of the input distribution backward. The model and method proposed have been validated through precise experiments.

Late Breaking Work

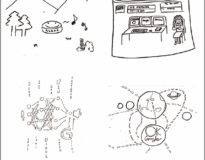

“What does your Agent look like?” A Drawing Study to Understand Users’ Perceived Persona of Conversational Agent

Tuesday - 10:20 - 11:00 | Session of Des | Room: Hall 4

- Sunok Lee, Department of Industrial Design, KAIST

- Sungbae Kim, Department of Industrial Design, KAIST

- Sangsu Lee, Department of Industrial Design, KAIST

Conversational agents (CAs) are becoming more popular and useful at home. Creating the persona is an important part of designing the conversational user interface (CUI). Since the CUI is a voice-mediated interface, users naturally form an image of the CA’s persona through the voice. Because that image affects users’ interaction with CAs while using a CUI, we tried to understand users’ perception via a drawing method. We asked 31 users to draw an image of the CA that communicates with the user. Through a qualitative analysis of the collected drawings and interviews, we identified the various types of CA personas perceived by users and found design factors that influenced users’ perception. Our findings help us understand persona perception and provide designers with design implications for creating an appropriate persona.

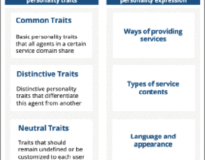

Designing Personalities of Conversational Agents

Tuesday - 10:20 - 11:00 | Session of Des | Room: Hall 4

- Hankyung Kim, Department of Industrial Design, KAIST

- Dong Yoon Koh, Department of Industrial Design, KAIST

- Gaeun Lee, Samsung Research

- Jung-Mi Park, Samsung Research

- Youn-kyung Lim, Department of Industrial Design, KAIST

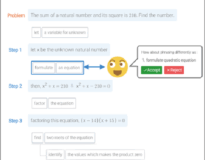

SolveDeep: A System for Supporting Subgoal Learning in Online Math Problem Solving

Tuesday - 10:20 - 11:00 | Session of Des | Room: Hall 4

- Hyoungwook Jin, School of Computing, KAIST

- Minsuk Chang, School of Computing, KAIST

- Juho Kim, School of Computing, KAIST

Learner-driven subgoal labeling helps learners form a hierarchical structure of solutions with subgoals, which are conceptual units of procedural problem solving. While learning with such hierarchical structure of a solution in mind is effective in learning problem solving strategies, the development of an interactive feedback system to support subgoal labeling tasks at scale requires significant expert efforts, making learner-driven subgoal labeling difficult to be applied in online learning environments. We propose SolveDeep, a system that provides feedback on learner solutions with peer-generated subgoals. SolveDeep utilizes a learnersourcing workflow to generate the hierarchical representation of possible solutions, and uses a graph-alignment algorithm to generate a solution graph by merging the populated solution structures, which are then used to generate feedback on future learners’ solutions. We conducted a user study with 7 participants to evaluate the efficacy of our system. Participants did subgoal learning with two math problems and rated the usefulness of system feedback. The average rating was 4.86 out of 7 (1: Not useful, 7: Useful), and the system could successfully construct a hierarchical structure of solutions with learnersourced subgoal labels.

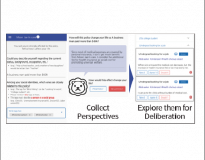

Crowdsourcing Perspectives on Public Policy from Stakeholders

Tuesday - 10:20 - 11:00 | Session of Des | Room: Hall 4

- Hyunwoo Kim, School of Computing, KAIST

- Eun-Young Ko, School of Computing, KAIST

- Donghoon Han, School of Computing, KAIST

- Sung-Chul Lee, School of Computing, KAIST

- Simon T. Perrault, Singapore University of Technology and Design, Singapore

- Jihee Kim, School of Business and Technology Management, KAIST

- Juho Kim, School of Computing, KAIST

Personal deliberation, the process through which people can form an informed opinion on social issues, serves an important role in helping citizens construct a rational argument in public deliberation. However, existing information channels for public policies deliver only a few stakeholders’ voices, thus failing to provide a diverse knowledge base for personal deliberation. This paper presents an initial design of PolicyScape, an online system that supports personal deliberation on public policies by helping citizens explore diverse stakeholders and their perspectives on the policy’s effect. Building on literature on crowdsourced policymaking and policy stakeholders, we present several design choices for crowdsourcing stakeholder perspectives. We introduce perspective-taking as an approach for personal deliberation by helping users consider stakeholder perspectives on policy issues. Our initial results suggest that PolicyScape could collect diverse sets of perspectives from the stakeholders of public policies, and help participants discover unexpected viewpoints of various stakeholder groups.

Improving Two-Thumb Touchpad Typing in Virtual Reality

Wednesday 15:20 - 16:00 | Late-breaking Work: Poster Rotation 2 | Hall 4

- Jeongmin Son, School of Computing, KAIST

- Sunggeun Ahn, School of Computing, KAIST

- Sunbum Kim, School of Computing, KAIST

- Geehyuk Lee, School of Computing, KAIST

Two-Thumb Touchpad Typing (4T) using hand-held controllers is one of the common text entry techniques in Virtual Reality (VR). However, its performance is far below that of Two-Thumb Typing on a smartphone. We explored the possibility of improving its performance, focusing on the following two factors: the visual feedback of hovering thumbs and the grip stability of the controllers. We examined the effects of these two factors on the performance of 4T in VR in user experiments. The results show that hover feedback had a significant main effect on the 4T performance, but grip stability did not. We then investigated the achievable performance of the final 4T design in a longitudinal study, and its results show that users could achieve a typing speed over 30 words per minute after two hours of practice.

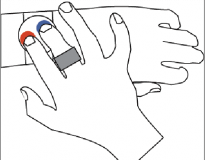

FingMag: Finger Identification Method for Smartwatch

Wednesday 15:20 - 16:00 | Late-breaking Work: Poster Rotation 2 | Hall 4

- Keunwoo Park, School of Computing, KAIST

- Geehyuk Lee, School of Computing, KAIST

Interacting with a smartwatch is difficult owing to its small touchscreen. A general strategy to overcome the limitations of the small screen is to increase the input vocabulary. A popular approach to do this is to distinguish fingers and assign different functions to them. As a finger identification method for a smartwatch, we propose FingMag, a machine-learning-based method that identifies the finger on the screen with the help of a ring. For this identification, the finger’s touch position and the magnetic field from a magnet embedded in the ring are used. In an offline evaluation using data collected from 12 participants, we show that FingMag can identify the finger with an accuracy of 96.21% in stationary geomagnetic conditions.

Workshops & Symposia

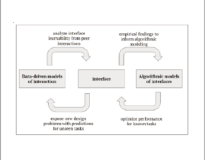

User-Centered Graphical Models of Interaction

Workshop on Computational Modeling in Human-Computer Interaction

- Minsuk Chang, School of Computing, KAIST

- Juho Kim, School of Computing, KAIST

In this position paper, I present a set of data-driven techniques for modeling the learning material, learner workflow and the learning task as graphical representations, which at scale can create and support learning opportunities in the wild. I propose that the graphical models resulting from this bottom-up approach can further serve as proxies for representing learnability bounds of an interface. I also propose an alternative approach which directly aims to “learn” the interaction bounds by modeling the interface as an agent’s sequential decision-making problem. Then I illustrate how the data-driven modeling techniques and algorithm modeling techniques can create a mutually beneficial bridge for advancing design of interfaces.

Readersourcing an Accurate and Comprehensive Understanding of Health-related Information Represented by Media

Workshop on HCI for Accurate, Impartial and Transparent Journalism: Challenges and Solutions

- Eun-Young Ko, School of Computing, KAIST

- Ching Liu, National Tsing Hua University

- Hyuntak Cha, Seoul National University

- Juho Kim, School of Computing, KAIST

Health news delivers findings from health-related research to the public. As the delivered information may affect the public’s everyday decision or behavior, readers should get an accurate and comprehensive understanding of the research from articles they read. However, it is rarely achieved due to incomplete information delivered by the news stories and a lack of critical evaluation of readers. In this position paper, we propose a readersourcing approach, an idea of engaging readers in a critical reading activity while collecting valuable artifacts for future readers to acquire a more accurate and comprehensive understanding of health-related information. We discuss challenges, opportunities, and design considerations in the readersourcing approach. Then we present the initial design of a web-based news reading application that connects health news readers via questioning and answering tasks.

PlayMaker: A Participatory Design Method for Creating Entertainment Application Concepts Using Activity Data

Workshop on HCI for Accurate, Impartial and Transparent Journalism: Challenges and Solutions

- Dong Yoon Koh, Department of Industrial Design, KAIST

- Ju Yeon Kim, Department of Industrial Design, KAIST

- Donghyeok Yun, Department of Industrial Design, KAIST

- Youn-kyung Lim, Department of Industrial Design, KAIST

The public’s ever-growing interest in health has led the well-being industry to explosive growth over the years. This propelled activity trackers as one of the trendiest items among the current day wearable devices. Seeking new opportunities for effective data utilization, we present a participatory design method that explores the fusion of activity data with entertainment application. In this method we spur participants to design by mix-and-matching activity tracker data attributes to existing entertainment application features to produce new concepts. We report two cases of method implementation and further discuss the opportunities of activity tracker data as means for entertainment application design.