DATE 11 May – 16 May 2024

We are excited to bring good news! At CHI 2024, KAIST records a total of 34 Full Paper publications, 9 Late-Breaking Works, 3 Interactivities, 2 Workshops, 1 Video Showcase, and 1 Special Interest Group. Congratulations on the outstanding achievement!

Paper Publications

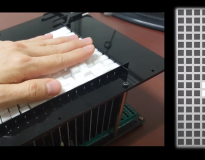

Big or Small, It’s All in Your Head: Visuo-Haptic Illusion of Size-Change Using Finger-Repositioning

CHI'24 Honorable Mention

Myung Jin Kim (KAIST); Eyal Ofek (Microsoft Research); Michel Pahud (Microsoft Research); Mike J Sinclair (University of Washington); Andrea Bianchi (KAIST)

Haptic perception of physical sizes increases the realism and immersion in Virtual Reality (VR). Prior work rendered sizes by exerting pressure on the user’s fingertips or employing tangible, shape-changing devices. These interfaces are constrained by the physical shapes they can assume, making it challenging to simulate objects growing larger or smaller than the perceived size of the interface. Motivated by literature on pseudo-haptics describing the strong influence of visuals over haptic perception, this work investigates modulating the perception of size beyond this range. We developed a fixed-sized VR controller leveraging finger-repositioning to create a visuo-haptic illusion of dynamic size-change of handheld virtual objects. Through two user studies, we found that with an accompanying size-changing visual context, users can perceive virtual object sizes up to 44.2% smaller to 160.4% larger than the perceived size of the device. Without the accompanying visuals, a constant size (141.4% of device size) was perceived.

Comfortable Mobility vs. Attractive Scenery: The Key to Augmenting Narrative Worlds in Outdoor Locative Augmented Reality Storytelling

CHI'24 Honorable Mention

Hyerim Park (KAIST); Aram Min (Technical Research Institute, Hanmac Engineering); Hyunjin Lee (KAIST); Maryam Shakeri (K.N. Toosi University of Technology); Ikbeom Jeon (KAIST); Woontack Woo (KAIST)

We investigate how path context, encompassing both comfort and attractiveness, shapes user experiences in outdoor locative storytelling using Augmented Reality (AR). Addressing a research gap that predominantly concentrates on indoor settings or narrative backdrops, our user-focused research delves into the interplay between perceived path context and locative AR storytelling on routes with diverse walkability levels. We examine the correlation and causation between narrative engagement, spatial presence, perceived workload, and perceived path context. Our findings show that on paths with reasonable path walkability, attractive elements positively influence the narrative experience. However, even in environments with assured narrative walkability, inappropriate safety elements can divert user attention to mobility, hindering the integration of real-world features into the narrative. These results carry significant implications for path creation in outdoor locative AR storytelling, underscoring the importance of ensuring comfort and maintaining a balance between comfort and attractiveness to enrich the outdoor AR storytelling experience.

Demystifying Tacit Knowledge in Graphic Design: Characteristics, Instances, Approaches, and Guidelines

CHI'24 Honorable Mention

Kihoon Son (KAIST); DaEun Choi (KAIST); Tae Soo Kim (KAIST); Juho Kim (KAIST)

Despite the growing demand for professional graphic design knowledge, the tacit nature of design inhibits knowledge sharing. However, there is a limited understanding of the characteristics and instances of tacit knowledge in graphic design. In this work, we build a comprehensive set of tacit knowledge characteristics through a literature review. Through interviews with 10 professional graphic designers, we collected 123 tacit knowledge instances and labeled their characteristics. By qualitatively coding the instances, we identified the prominent elements, actions, and purposes of tacit knowledge. To identify which instances have been addressed the least, we conducted a systematic literature review of prior system support for graphic design. By understanding the reasons for the lack of support for these instances based on their characteristics, we propose design guidelines for capturing and applying tacit knowledge in design tools. This work takes a step towards understanding tacit knowledge, and how this knowledge can be communicated.

FoodCensor: Promoting Mindful Digital Food Content Consumption for People with Eating Disorders

CHI'24 Honorable Mention

Ryuhaerang Choi (KAIST); Subin Park (KAIST); Sujin Han (KAIST); Sung-Ju Lee (KAIST)

Digital food content’s popularity is underscored by recent studies revealing its addictive nature and association with disordered eating. Notably, individuals with eating disorders exhibit a positive correlation between their digital food content consumption and disordered eating behaviors. Based on these findings, we introduce FoodCensor, an intervention designed to empower individuals with eating disorders to make informed, conscious, and health-oriented digital food content consumption decisions. FoodCensor (i) monitors and hides passively exposed food content on smartphones and personal computers, and (ii) prompts reflective questions for users when they spontaneously search for food content. We deployed FoodCensor to people with binge eating disorder or bulimia (n=22) for three weeks. Our user study reveals that FoodCensor fostered self-awareness and self-reflection about unconscious digital food content consumption habits, enabling them to adopt healthier behaviors consciously. Furthermore, we discuss design implications for promoting healthier digital content consumption practices for populations vulnerable to specific content types.

Teach AI How to Code: Using Large Language Models as Teachable Agents for Programming Education

CHI'24 Honorable Mention

Hyoungwook Jin (KAIST); Seonghee Lee (Stanford University); Hyungyu Shin (KAIST); Juho Kim (KAIST)

This work investigates large language models (LLMs) as teachable agents for learning by teaching (LBT). LBT with teachable agents helps learners identify knowledge gaps and discover new knowledge. However, teachable agents require expensive programming of subject-specific knowledge. While LLMs as teachable agents can reduce the cost, LLMs’ expansive knowledge as tutees discourages learners from teaching. We propose a prompting pipeline that restrains LLMs’ knowledge and makes them initiate “why” and “how” questions for effective knowledge-building. We combined these techniques into TeachYou, an LBT environment for algorithm learning, and AlgoBo, an LLM-based tutee chatbot that can simulate misconceptions and unawareness prescribed in its knowledge state. Our technical evaluation confirmed that our prompting pipeline can effectively configure AlgoBo’s problem-solving performance. Through a between-subject study with 40 algorithm novices, we also observed that AlgoBo’s questions led to knowledge-dense conversations (effect size=0.71). Lastly, we discuss design implications, cost-efficiency, and personalization of LLM-based teachable agents.

S-ADL: Exploring Smartphone-based Activities of Daily Living to Detect Blood Alcohol Concentration in a Controlled Environment

CHI'24 Honorable Mention

Hansoo Lee (Korea Advanced Institute of Science and Technology); Auk Kim (Kangwon National University); Sang Won Bae (Stevens Institute of Technology); Uichin Lee (KAIST)

In public health and safety, precise detection of blood alcohol concentration (BAC) plays a critical role in implementing responsive interventions that can save lives. While previous research has primarily focused on computer-based or neuropsychological tests for BAC identification, the potential use of daily smartphone activities for BAC detection in real-life scenarios remains largely unexplored. Drawing inspiration from Instrumental Activities of Daily Living (I-ADL), our hypothesis suggests that Smartphone-based Activities of Daily Living (S-ADL) can serve as a viable method for identifying BAC. In our proof-of-concept study, we propose, design, and assess the feasibility of using S-ADLs to detect BAC in a scenario-based controlled laboratory experiment involving 40 young adults. In this study, we identify key S-ADL metrics, such as delayed texting in SMS, site searching, and finance management, that significantly contribute to BAC detection (with an AUC-ROC and accuracy of 81%). We further discuss potential real-life applications of the proposed BAC model.

Reinforcing and Reclaiming The Home: Co-speculating Future Technologies to Support Remote and Hybrid Work

CHI'24 Honorable Mention

Janghee Cho (National University of Singapore); Dasom Choi (KAIST); Junnan Yu (The Hong Kong Polytechnic University); Stephen Voida (University of Colorado Boulder)

With the rise of remote and hybrid work after COVID-19, there is growing interest in understanding remote workers’ experiences and designing digital technology for the future of work within the field of HCI. To gain a holistic understanding of how remote workers navigate the blurred boundary between work and home and how designers can better support their boundary work, we employ humanistic geography as a lens. We engaged in co-speculative design practices with 11 remote workers in the US, exploring how future technologies might sustainably enhance participants’ work and home lives in remote/hybrid arrangements. We present the imagined technologies that resulted from this process, which both reinforce remote workers’ existing boundary work practices through everyday routines/rituals and reclaim the notion of home by fostering independence, joy, and healthy relationships. Our discussions with participants inform implications for designing digital technologies that promote sustainability in the future remote/hybrid work landscape.

Natural Language Dataset Generation Framework for Visualizations Powered by Large Language Models

CHI'24

Kwon Ko (KAIST); Hyeon Jeon (Seoul National University); Gwanmo Park (Seoul National University); Dae Hyun Kim (KAIST); Nam Wook Kim (Boston College); Juho Kim (KAIST); Jinwook Seo (Seoul National University)

We introduce VL2NL, a Large Language Model (LLM) framework that generates rich and diverse NL datasets using Vega-Lite specifications as input, thereby streamlining the development of Natural Language Interfaces (NLIs) for data visualization. To synthesize relevant chart semantics accurately and enhance syntactic diversity in each NL dataset, we leverage 1) a guided discovery incorporated into prompting so that LLMs can steer themselves to create faithful NL datasets in a self-directed manner; 2) a score-based paraphrasing to augment NL syntax along with four language axes. We also present a new collection of 1,981 real-world Vega-Lite specifications that have greater diversity and complexity than existing chart collections. When tested on our chart collection, VL2NL extracted chart semantics and generated L1/L2 captions with 89.4% and 76.0% accuracy, respectively. It also demonstrated generating and paraphrasing utterances and questions with greater diversity compared to the benchmarks. Last, we discuss how our NL datasets and framework can be utilized in real-world scenarios. The code and chart collection are available at https://github.com/hyungkwonko/chart-llm.

CHI'24

Kongpyung (Justin) Moon (KAIST); Zofia Marciniak (Korea Advanced Institute of Science and Technology); Ryo Suzuki (University of Calgary); Andrea Bianchi (KAIST)

3D printed displays promise to create unique visual interfaces for physical objects. However, current methods for creating 3D printed displays either require specialized post-fabrication processes (e.g., electroluminescence spray and silicon casting) or function as passive elements that simply react to environmental factors (e.g., body and air temperature). These passive displays offer limited control over when, where, and how the colors change. In this paper, we introduce ThermoPixels, a method for designing and 3D printing actively controlled and visually rich thermochromic displays that can be embedded in arbitrary geometries. We investigate the color-changing and thermal properties of thermochromic and conductive filaments. Based on these insights, we designed ThermoPixels and an accompanying software tool that allows embedding ThermoPixels in arbitrary 3D geometries, creating displays of various shapes and sizes (flat, curved, or matrix displays) or displays that embed textures, multiple colors, or that are flexible.

CHI'24

Jeesun Oh (KAIST); Wooseok Kim (KAIST); Sungbae Kim (KAIST); Hyeonjeong Im (KAIST); Sangsu Lee (KAIST)

Proactive voice assistants (VAs) in smart homes predict users’ needs and autonomously take action by controlling smart devices and initiating voice-based features to support users’ various activities. Previous studies on proactive systems have primarily focused on determining action based on contextual information, such as user activities, physiological state, or mobile usage. However, there is a lack of research that considers user agency in VAs’ proactive actions, which empowers users to express their dynamic needs and preferences and promotes a sense of control. Thus, our study aims to explore verbal communication through which VAs can proactively take action while respecting user agency. To delve into communication between a proactive VA and a user, we used the Wizard of Oz method to set up a smart home environment, allowing controllable devices and unrestrained communication. This paper proposes design implications for the communication strategies of proactive VAs that respect user agency.

CreativeConnect: Supporting Reference Recombination for Graphic Design Ideation with Generative AI

CHI'24

DaEun Choi (KAIST); Sumin Hong (Seoul National University of Science and Technology); Jeongeon Park (KAIST); John Joon Young Chung (SpaceCraft Inc.); Juho Kim (KAIST)

Graphic designers often get inspiration through the recombination of references. Our formative study (N=6) reveals that graphic designers focus on conceptual keywords during this process, and want support for discovering the keywords, expanding them, and exploring diverse recombination options of them, while still having room for designers’ creativity. We propose CreativeConnect, a system with generative AI pipelines that helps users discover useful elements from the reference image using keywords, recommends relevant keywords, generates diverse recombination options with user-selected keywords, and shows recombinations as sketches with text descriptions. Our user study (N=16) showed that CreativeConnect helped users discover keywords from the reference and generate multiple ideas based on them, ultimately helping users produce more design ideas with higher self-reported creativity compared to the baseline system without generative pipelines. While CreativeConnect was shown effective in ideation, we discussed how CreativeConnect can be extended to support other types of tasks in creativity support.

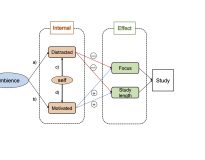

DeepStress: Supporting Stressful Context Sensemaking in Personal Informatics Systems Using a Quasi-experimental Approach

CHI'24

Gyuwon Jung (KAIST); Sangjun Park (KAIST); Uichin Lee (KAIST)

Personal informatics (PI) systems are widely used in various domains such as mental health to provide insights from self-tracking data for behavior change. Users are highly interested in examining relationships from the self-tracking data, but identifying causality is still considered challenging. In this study, we design DeepStress, a PI system that helps users analyze contextual factors causally related to stress. DeepStress leverages a quasi-experimental approach to address potential biases related to confounding factors. To explore the user experience of DeepStress, we conducted a user study and a follow-up diary study using participants’ own self-tracking data collected for 6 weeks. Our results show that DeepStress helps users consider multiple contexts when investigating causalities and use the results to manage their stress in everyday life. We discuss design implications for causality support in PI systems.

DiaryMate: Understanding User Perceptions and Experience in Human-AI Collaboration for Personal Journaling

CHI'24

Taewan Kim (KAIST); Donghoon Shin (University of Washington); Young-Ho Kim (NAVER AI Lab); Hwajung Hong (KAIST)

With their generative capabilities, large language models (LLMs) have transformed the role of technological writing assistants from simple editors to writing collaborators. Such a transition emphasizes the need for understanding user perception and experience, such as balancing user intent and the involvement of LLMs across various writing domains in designing writing assistants. In this study, we delve into the less explored domain of personal writing, focusing on the use of LLMs in introspective activities. Specifically, we designed DiaryMate, a system that assists users in journal writing with an LLM. Through a 10-day field study (N=24), we observed that participants used the diverse sentences generated by the LLM to reflect on their past experiences from multiple perspectives. However, we also observed that they over-relied on the LLM, often prioritizing its emotional expressions over their own. Drawing from these findings, we discuss design considerations when leveraging LLMs in a personal writing practice.

EvalLM: Interactive Evaluation of Large Language Model Prompts on User-Defined Criteria

CHI'24

Tae Soo Kim (KAIST); Yoonjoo Lee (KAIST); Jamin Shin (NAVER AI Lab); Young-Ho Kim (NAVER AI Lab); Juho Kim (KAIST)

By simply composing prompts, developers can prototype novel generative applications with Large Language Models (LLMs). To refine prototypes into products, however, developers must iteratively revise prompts by evaluating outputs to diagnose weaknesses. Formative interviews (N=8) revealed that developers invest significant effort in manually evaluating outputs as they assess context-specific and subjective criteria. We present EvalLM, an interactive system for iteratively refining prompts by evaluating multiple outputs on user-defined criteria. By describing criteria in natural language, users can employ the system’s LLM-based evaluator to get an overview of where prompts excel or fail, and improve these based on the evaluator’s feedback. A comparative study (N=12) showed that EvalLM, when compared to manual evaluation, helped participants compose more diverse criteria, examine twice as many outputs, and reach satisfactory prompts with 59% fewer revisions. Beyond prompts, our work can be extended to augment model evaluation and alignment in specific application contexts.

Exploring Context-Aware Mental Health Self-Tracking Using Multimodal Smart Speakers in Home Environments

CHI'24

Jieun Lim (KAIST); Youngji Koh (KAIST); Auk Kim (Kangwon National University); Uichin Lee (KAIST)

People with mental health issues often stay indoors, reducing their outdoor activities. This situation emphasizes the need for self-tracking technology in homes for mental health research, offering insights into their daily lives and potentially improving care. This study leverages a multimodal smart speaker to design a proactive self-tracking research system that delivers mental health surveys using an experience sampling method (ESM). Our system determines ESM delivery timing by detecting user context transitions and allows users to answer surveys through voice dialogues or touch interactions. Furthermore, we explored the user experience of a proactive self-tracking system by conducting a four-week field study (n=20). Our results show that context transition-based ESM delivery can increase user compliance. Participants preferred touch interactions to voice commands, and the modality selection varied depending on the user’s immediate activity context. We explored the design implications for home-based, context-aware self-tracking with multimodal speakers, focusing on practical applications.

FLUID-IoT: Flexible and Fine-Grained Access Control in Shared IoT Environments via Multi-user UI Distribution

CHI'24

Sunjae Lee (KAIST); Minwoo Jeong (KAIST); Daye Song (KAIST); Junyoung Choi (KAIST); Seoyun Son (KAIST); Jean Y Song (DGIST); Insik Shin (KAIST)

The rapid growth of the Internet of Things (IoT) in shared spaces has led to an increasing demand for sharing IoT devices among multiple users. Yet, existing IoT platforms often fall short by offering an all-or-nothing approach to access control, not only posing security risks but also inhibiting the growth of the shared IoT ecosystem. This paper introduces FLUID-IoT, a framework that enables flexible and granular multi-user access control, even down to the User Interface (UI) component level. Leveraging a multi-user UI distribution technique, FLUID-IoT transforms existing IoT apps into centralized hubs that selectively distribute UI components to users based on their permission levels. Our performance evaluation, encompassing coverage, latency, and memory consumption, affirms that FLUID-IoT can be seamlessly integrated with existing IoT platforms and offers adequate performance for daily IoT scenarios. An in-lab user study further supports that the framework is intuitive and user-friendly, requiring minimal training for efficient utilization.

GenQuery: Supporting Expressive Visual Search with Generative Models

CHI'24

Kihoon Son (KAIST); DaEun Choi (KAIST); Tae Soo Kim (KAIST); Young-Ho Kim (NAVER AI Lab); Juho Kim (KAIST)

Designers rely on visual search to explore and develop ideas in early design stages. However, designers can struggle to identify suitable text queries to initiate a search or to discover images for similarity-based search that can adequately express their intent. We propose GenQuery, a novel system that integrates generative models into the visual search process. GenQuery can automatically elaborate on users’ queries and surface concrete search directions when users only have abstract ideas. To support precise expression of search intents, the system enables users to generatively modify images and use these in similarity-based search. In a comparative user study (N=16), designers felt that they could more accurately express their intents and find more satisfactory outcomes with GenQuery compared to a tool without generative features. Furthermore, the unpredictability of generations allowed participants to uncover more diverse outcomes. By supporting both convergence and divergence, GenQuery led to a more creative experience.

Investigating the Design of Augmented Narrative Spaces Through Virtual-Real Connections: A Systematic Literature Review

CHI'24

Jae-eun Shin (KAIST); Hayun Kim (KAIST); Hyerim Park (KAIST); Woontack Woo (KAIST)

Augmented Reality (AR) is regarded as an innovative storytelling medium that presents novel experiences by layering a virtual narrative space over a real 3D space. However, understanding of how the virtual narrative space and the real space are connected with one another in the design of augmented narrative spaces has been limited. For this, we conducted a systematic literature review of 64 articles featuring AR storytelling applications and systems in HCI, AR, and MR research. We investigated how virtual narrative spaces have been paired, functionalized, placed, and registered in relation to the real spaces they target. Based on these connections, we identified eight dominant types of augmented narrative spaces that are primarily categorized by whether they virtually narrativize reality or realize the virtual narrative. We discuss our findings to propose design recommendations on how virtual-real connections can be incorporated into a more structured approach to AR storytelling.

Investigating the Potential of Group Recommendation Systems As a Medium of Social Interactions: A Case of Spotify Blend Experiences between Two Users

CHI'24

Daehyun Kwak (KAIST); Soobin Park (KAIST); Inha Cha (Georgia Institute of Technology); Hankyung Kim (KAIST); Youn-kyung Lim (KAIST)

Designing user experiences for group recommendation systems (GRS) is challenging, requiring a nuanced understanding of the influence of social interactions between users. Using Spotify Blend as a real-world case of music GRS, we conducted empirical studies to investigate intricate social interactions among South Korean users in GRS. Through a preliminary survey about Blend experiences in general, we narrowed the focus for the main study to relationships between two users who are acquainted or close. Building on this, we conducted a 21-day diary study and interviews with 30 participants (15 pairs) to probe more in-depth interpersonal dynamics within Blend. Our findings reveal that users engaged in implicit social interactions, including tacit understanding of their companions and indirect communication. We conclude by discussing the newly discovered value of GRS as a social catalyst, along with design attributes and challenges for the social experiences it mediates.

MindfulDiary: Harnessing Large Language Model to Support Psychiatric Patients' Journaling

CHI'24

Taewan Kim (KAIST); Seolyeong Bae (Gwangju Institute of Science and Technology); Hyun Ah Kim (NAVER Cloud); Su-woo Lee (Wonkwang University Hospital); Hwajung Hong (KAIST); Chanmo Yang (Wonkwang University Hospital, Wonkwang University); Young-Ho Kim (NAVER AI Lab)

Large Language Models (LLMs) offer promising opportunities in mental health domains, although their inherent complexity and low controllability elicit concern regarding their applicability in clinical settings. We present MindfulDiary, an LLM-driven journaling app that helps psychiatric patients document daily experiences through conversation. Designed in collaboration with mental health professionals, MindfulDiary takes a state-based approach to safely comply with the experts’ guidelines while carrying on free-form conversations. Through a four-week field study involving 28 patients with major depressive disorder and five psychiatrists, we examined how MindfulDiary facilitates patients’ journaling practice and clinical care. The study revealed that MindfulDiary supported patients in consistently enriching their daily records and helped clinicians better empathize with their patients through an understanding of their thoughts and daily contexts. Drawing on these findings, we discuss the implications of leveraging LLMs in the mental health domain, bridging the technical feasibility and their integration into clinical settings.

Navigating User-System Gaps: Understanding User-Interactions in User-Centric Context-Aware Systems for Digital Well-being Intervention

CHI'24

Inyeop Kim (KAIST); Uichin Lee (KAIST)

In this paper, we investigate the challenges users face with a user-centric context-aware intervention system. Users often face gaps when the system’s responses do not align with their goals and intentions. We explore these gaps through a prototype system that enables users to specify context-action intervention rules as they desire. We conducted a lab study to understand how users perceive and cope with gaps while translating their intentions into rules, revealing that users experience context-mapping and context-recognition uncertainties (instant evaluation cycle). We also performed a field study to explore how users perceive gaps and adapt their rules as the specified rules operate in real-world settings (delayed evaluation cycle). This research highlights the dynamic nature of user interaction with context-aware systems and suggests the potential of such systems in supporting digital well-being. It provides insights into user adaptation processes and offers guidance for designing user-centric context-aware applications.

PaperWeaver: Enriching Topical Paper Alerts by Contextualizing Recommended Papers with User-collected Papers

CHI'24

Yoonjoo Lee (KAIST); Hyeonsu B Kang (Carnegie Mellon University); Matt Latzke (Allen Institute for AI); Juho Kim (KAIST); Jonathan Bragg (Allen Institute for Artificial Intelligence); Joseph Chee Chang (Allen Institute for AI); Pao Siangliulue (Allen Institute for AI)

With the rapid growth of scholarly archives, researchers subscribe to “paper alert” systems that periodically provide them with recommendations of recently published papers that are similar to previously collected papers. However, researchers sometimes struggle to make sense of nuanced connections between recommended papers and their own research context, as existing systems only present paper titles and abstracts. To help researchers spot these connections, we present PaperWeaver, an enriched paper alerts system that provides contextualized text descriptions of recommended papers based on user-collected papers. PaperWeaver employs a computational method based on Large Language Models (LLMs) to infer users’ research interests from their collected papers, extract context-specific aspects of papers, and compare recommended and collected papers on these aspects. Our user study (N=15) showed that participants using PaperWeaver were able to better understand the relevance of recommended papers and triage them more confidently when compared to a baseline that presented the related work sections from recommended papers.

PriviAware: Exploring Data Visualization and Dynamic Privacy Control Support for Data Collection in Mobile Sensing Research

CHI'24

Hyunsoo Lee (KAIST); Yugyeong Jung (KAIST); Hei Yiu Law (Korea Advanced Institute of Science and Technology); Seolyeong Bae (Gwangju Institute of Science and Technology); Uichin Lee (KAIST)

With increased interest in leveraging personal data collected from 24/7 mobile sensing for digital healthcare research, supporting user-friendly consent to data collection for user privacy has also become important. This work proposes PriviAware, a mobile app that promotes flexible user consent to data collection with data exploration and contextual filters that enable users to turn off data collection based on time and places that are considered privacy-sensitive. We conducted a user study (N = 58) to explore how users leverage data exploration and contextual filter functions to explore and manage their data and whether our system design helped users mitigate their privacy concerns. Our findings indicate that offering fine-grained control is a promising approach to raising users’ privacy awareness under the dynamic nature of the pervasive sensing context. We provide practical privacy-by-design guidelines for mobile sensing research.

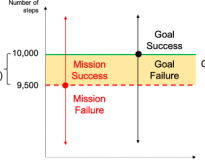

Time2Stop: Adaptive and Explainable Human-AI Loop for Smartphone Overuse Intervention

CHI'24

Adiba Orzikulova (KAIST); Han Xiao (Beijing University of Posts and Telecommunications); Zhipeng Li (Department of Computer Science and Technology, Tsinghua University); Yukang Yan (Carnegie Mellon University); Yuntao Wang (Tsinghua University); Yuanchun Shi (Tsinghua University); Marzyeh Ghassemi (MIT); Sung-Ju Lee (KAIST); Anind K Dey (University of Washington); Xuhai “Orson” Xu (Massachusetts Institute of Technology, University of Washington)

Despite a rich history of investigating smartphone overuse intervention techniques, AI-based just-in-time adaptive intervention (JITAI) methods for overuse reduction are lacking. We develop Time2Stop, an intelligent, adaptive, and explainable JITAI system that leverages machine learning to identify optimal intervention timings, introduces interventions with transparent AI explanations, and collects user feedback to establish a human-AI loop and adapt the intervention model over time. We conducted an 8-week field experiment (N=71) to evaluate the effectiveness of both the adaptation and explanation aspects of Time2Stop. Our results indicate that our adaptive models significantly outperform the baseline methods on intervention accuracy (>32.8% relatively) and receptivity (>8.0%). In addition, incorporating explanations further enhances the effectiveness by 53.8% and 11.4% on accuracy and receptivity, respectively. Moreover, Time2Stop significantly reduces overuse, decreasing app visit frequency by 7.0% to 8.9%. Our subjective data also echoed these quantitative measures. Participants preferred the adaptive interventions and rated the system highly on intervention time accuracy, effectiveness, and level of trust. We envision our work can inspire future research on JITAI systems with a human-AI loop to evolve with users.

Unlock Life with a Chat(GPT): Integrating Conversational AI with Large Language Models into Everyday Lives of Autistic Individuals

CHI'24

Dasom Choi (KAIST); Sunok Lee (KAIST); Sung-In Kim (Seoul National University Hospital); Kyungah Lee (Daegu University); Hee Jeong Yoo (Seoul National University Bundang Hospital); Sangsu Lee (KAIST); Hwajung Hong (KAIST)

Autistic individuals often draw on insights from their supportive networks to develop self-help life strategies ranging from everyday chores to social activities. However, human resources may not always be immediately available. Recently emerging conversational agents (CAs) that leverage large language models (LLMs) have the potential to serve as powerful information-seeking tools, helping autistic individuals tackle daily concerns independently. This study explored the opportunities and challenges of LLM-driven CAs in empowering autistic individuals through focus group interviews and workshops (N=14). We found that autistic individuals expected LLM-driven CAs to offer a non-judgmental space, encouraging them to approach day-to-day issues proactively. However, they raised issues regarding critically digesting the CA responses and disclosing their autistic characteristics. Based on these findings, we propose approaches that place autistic individuals at the center of shaping the meaning and role of LLM-driven CAs in their lives, while preserving their unique needs and characteristics.

User Performance in Consecutive Temporal Pointing: An Exploratory Study

CHI'24

Dawon Lee (KAIST); Sunjun Kim (Daegu Gyeongbuk Institute of Science and Technology (DGIST)); Junyong Noh (KAIST); Byungjoo Lee (Yonsei University)

A significant amount of research has recently been conducted on user performance in so-called temporal pointing tasks, in which a user is required to perform a button input at the timing required by the system. Consecutive temporal pointing (CTP), in which two consecutive button inputs must be performed while satisfying temporal constraints, is common in modern interactions, yet little is understood about user performance on the task. Through a user study involving 100 participants, we broadly explore user performance in a variety of CTP scenarios. The key finding is that CTP is a unique task that cannot be considered as two ordinary temporal pointing processes. Significant effects of button input method, motor limitations, and different hand use were also observed.

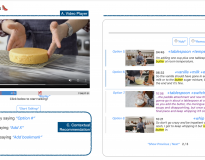

VIVID: Human-AI Collaborative Authoring of Vicarious Dialogues from Lecture Videos

CHI'24

Seulgi Choi (KAIST); Hyewon Lee (KAIST); Yoonjoo Lee (KAIST); Juho Kim (KAIST)

Lengthy monologue-style online lectures cause learners to lose engagement easily. Designing lectures in a “vicarious dialogue” format can foster learners’ cognitive activities more than a monologue style. However, designing online lectures in a dialogue style catered to the diverse needs of learners is laborious for instructors. We conducted a design workshop with eight educational experts and seven instructors to present key guidelines and the potential use of large language models (LLMs) to transform a monologue lecture script into pedagogically meaningful dialogue. Applying these design guidelines, we created VIVID, which allows instructors to collaborate with LLMs to design, evaluate, and modify pedagogical dialogues. In a within-subjects study with instructors (N=12), we show that VIVID helped instructors select and revise dialogues efficiently, thereby supporting the authoring of quality dialogues. Our findings demonstrate the potential of LLMs to assist instructors with creating high-quality educational dialogues across various learning stages.

Viewer2Explorer: Designing a Map Interface for Spatial Navigation in Linear 360 Museum Exhibition Video

CHI'24

Chaeeun Lee (KAIST); Jinwook Kim (KAIST); HyeonBeom Yi (KAIST); Woohun Lee (KAIST)

The pandemic has contributed to the increased digital content development for remote experiences. Notably, museums have begun creating virtual exhibitions using 360-videos, providing a sense of presence and high level of immersion. However, 360-video content often uses a linear timeline interface that requires viewers to follow the path decided by the video creators. This format limits viewers’ ability to actively engage with and explore the virtual space independently. Therefore, we designed a map-based video interface, Viewer2Explorer, that enables the user to perceive and explore virtual spaces autonomously. We then conducted a study to compare the overall experience between the existing linear timeline and map interfaces. Viewer2Explorer enhanced users’ spatial controllability and enabled active exploration in virtual museum exhibition spaces. Additionally, based on our map interface, we discuss a new type of immersion and assisted autonomy that can be experienced through a 360-video interface and provide design insights for future content.

A Design Space for Intelligent and Interactive Writing Assistants

CHI'24

Mina Lee (Microsoft Research); Katy Ilonka Gero (Harvard University); John Joon Young Chung (Midjourney); Simon Buckingham Shum (University of Technology Sydney); Vipul Raheja (Grammarly); Hua Shen (University of Michigan); Subhashini Venugopalan (Google); Thiemo Wambsganss (Bern University of Applied Sciences); David Zhou (University of Illinois Urbana-Champaign); Emad A. Alghamdi (King Abdulaziz University); Tal August (University of Washington); Avinash Bhat (McGill University); Madiha Zahrah Choksi (Cornell Tech); Senjuti Dutta (University of Tennessee, Knoxville); Jin L.C. Guo (McGill University); Md Naimul Hoque (University of Maryland); Yewon Kim (KAIST); Simon Knight (University of Technology Sydney); Seyed Parsa Neshaei (EPFL); Antonette Shibani (University of Technology Sydney); Disha Shrivastava (Google DeepMind); Lila Shroff (Stanford University); Agnia Sergeyuk (JetBrains Research); Jessi Stark (University of Toronto); Sarah Sterman (University of Illinois, Urbana-Champaign); Sitong Wang (Columbia University); Antoine Bosselut (EPFL); Daniel Buschek (University of Bayreuth); Joseph Chee Chang (Allen Institute for AI); Sherol Chen (Google); Max Kreminski (Midjourney); Joonsuk Park (University of Richmond); Roy Pea (Stanford University); Eugenia H Rho (Virginia Tech); Zejiang Shen (Massachusetts Institute of Technology); Pao Siangliulue (B12)

In our era of rapid technological advancement, the research landscape for writing assistants has become increasingly fragmented across various research communities. We seek to address this challenge by proposing a design space as a structured way to examine and explore the multidimensional space of intelligent and interactive writing assistants. Through community collaboration, we explore five aspects of writing assistants: task, user, technology, interaction, and ecosystem. Within each aspect, we define dimensions and codes by systematically reviewing 115 papers while leveraging the expertise of researchers in various disciplines. Our design space aims to offer researchers and designers a practical tool to navigate, comprehend, and compare the various possibilities of writing assistants, and aid in the design of new writing assistants.

Reconfigurable Interfaces by Shape Change and Embedded Magnets

CHI'24

Himani Deshpande (Texas A&M University); Bo Han (National University of Singapore); Kongpyung (Justin) Moon (KAIST); Andrea Bianchi (KAIST); Clement Zheng (National University of Singapore); Jeeeun Kim (Texas A&M University)

Reconfigurable physical interfaces empower users to swiftly adapt to tailored design requirements or preferences. Shape-changing interfaces enable such reconfigurability, avoiding the cost of refabrication or part replacements. Nonetheless, reconfigurable interfaces are often bulky, expensive, or inaccessible. We propose a reversible shape-changing mechanism that enables reconfigurable 3D printed structures via translations and rotations of parts. We investigate fabrication techniques that enable reconfiguration using magnets and the thermoplasticity of heated polymer. Proposed interfaces achieve tunable haptic feedback and adjustment of different user affordances by reconfiguring input motions. The design space is demonstrated through applications in rehabilitation, embodied communication, accessibility, safety, and gaming.

Interrupting for Microlearning: Understanding Perceptions and Interruptibility of Proactive Conversational Microlearning Services

CHI'24

Minyeong Kim (Kangwon National University); Jiwook Lee (Kangwon National University); Youngji Koh (Korea Advanced Institute of Science and Technology); Chanhee Lee (KAIST); Uichin Lee (KAIST); Auk Kim (Kangwon National University)

The significant investment of time and effort required for language learning has prompted a growing interest in microlearning. While microlearning requires frequent participation in 3-to-10-minute learning sessions, the recent widespread adoption of smart speakers in homes presents an opportunity to expand learning opportunities by proactively providing microlearning in daily life. However, such proactive provision can distract users. Despite the extensive research on proactive smart speakers and their opportune moments for proactive interactions, our understanding of opportune moments for more-than-one-minute interactions remains limited. This study aims to understand user perceptions and opportune moments for more-than-one-minute microlearning using proactive smart speakers at home. We first developed a proactive microlearning service through six pilot studies (n=29), and then conducted a three-week field study (n=28). We identified the key contextual factors relevant to opportune moments for microlearning of various durations, and discussed the design implications for proactive conversational microlearning services at home.

Your Avatar Seems Hesitant to Share About Yourself: How People Perceive Others' Avatars in the Transparent System

CHI'24

Yeonju Jang (Cornell University); Taenyun Kim (Michigan State University); Huisung Kwon (KAIST); Hyemin Park (Sungkyunkwan University); Ki Joon Kim (City University of Hong Kong)

In avatar-mediated communications, users often cannot identify how others’ avatars are created, which is an important piece of information they need to evaluate others. Thus, we tested a social virtual world that is transparent about others’ avatar-creation methods and investigated how knowing about others’ avatar-creation methods shapes users’ perceptions of others and their self-disclosure. We conducted a 2×2 mixed-design experiment with 60 participants, with system design (nontransparent vs. transparent system) as a between-subjects variable and avatar-creation method (customized vs. personalized avatar) as a within-subjects variable. The results revealed that personalized avatars in the transparent system were viewed less positively than customized avatars in the transparent system or avatars in the nontransparent system. These avatars appeared less comfortable and honest in their self-disclosure and less competent. Interestingly, avatars in the nontransparent system attracted more followers. Our results suggest being cautious when creating a social virtual world that discloses the avatar-creation process.

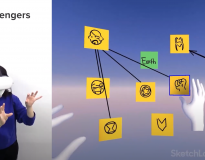

Interactivity

CHI'24

Sang Ho Yoon (KAIST); Youjin Sung (KAIST); Kun Woo Song (KAIST); Kyungeun Jung (KAIST); Kyungjin Seo (KAIST); Jina Kim (KAIST); Yi Hyung Il (KAIST); Nicha Vanichvoranun (Korea Advanced Institute of Science and Technology (KAIST)); Hanseok Jeong (Korea Advanced Institute of Science and Technology); Hojeong Lee (KAIST)

In this Interactivity, we present a lab demo on adaptive and immersive wearable interfaces that enhance extended reality (XR) interactions. Advances in wearable hardware with state-of-the-art software support have great potential to promote highly adaptive sensing and immersive haptic feedback for enhanced user experiences. Our research projects focus on novel sensing techniques, innovative hardware/devices, and realistic haptic rendering to achieve these goals. Ultimately, our work aims to improve the user experience in XR by overcoming the limitations of existing input control and haptic feedback. Our lab demo features three highly enhanced experiences with wearable interfaces. First, we present novel sensing techniques that enable a more precise understanding of user intent and status, enriched with a broader context. Then, we showcase innovative haptic devices and authoring toolkits that leverage the captured user intent and status. Lastly, we demonstrate immersive haptic rendering with body-based wearables that enhance the user experience.

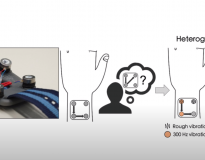

STButton: Exploring Opportunities for Buttons with Spatio-Temporal Tactile Output

CHI'24

Yeonsu Kim (KAIST); Jisu Yim (KAIST); JaeHyun Kim (KAIST); Kyunghwan Kim (KAIST); Geehyuk Lee (School of Computing, KAIST)

We present STButton, a physical button with a high-resolution spatio-temporal tactile feedback surface. Its 5 x 8 pin-array tactile display, measuring 20mm x 28mm, enables buttons to express various types of information, such as value with the number of raised pins, direction with the location of raised pins, and duration of time with blinking animation. With a highly expressive tactile surface, the button can seamlessly transfer assistive feedforward and feedback during spontaneous button interaction, such as touching to locate the button or applying gradual pressure to press the button. In the demonstration, attendees experience five scenarios of button interaction: the seat heater button on a car, the volume control button on a remote controller, the power button on a laptop, the menu button on a VR controller, and the play button on a game controller. In each scenario, the representative role of tactile feedback is configured differently, allowing attendees to experience the rich interaction space and potential benefits of STButton. Attendees given early access appreciated the unique opportunity to transfer information with a highly expressive tactile surface and emphasized that STButton adds a tangible layer to the user experience, enhancing emotional and sensory engagement.

EMPop: Pin Based Electromagnetic Actuation for Projection Mapping

CHI'24

Sungbaek Kim (Graduate School of Culture Technology, KAIST); Doyo Choi (Graduate School of Culture Technology, KAIST); Jinjoon Lee (KAIST)

As interactive media arts evolve, there is a growing demand for technologies that offer multisensory experiences beyond audiovisual elements in large-scale projection mapping exhibitions. However, traditional methods of providing tactile feedback are impractical in expansive settings due to their bulk and complexity. We propose the EMPop system, which uses a straightforward design of electromagnets and permanent magnets to make projection mapping more interactive and engaging. Our system is designed to control three permanent magnets individually with one electromagnet by adjusting the electromagnet’s current, which makes it reliable and scalable. We assessed its ability to convey directions and the strength of feedback, finding that users correctly identified directions and differentiated feedback intensity levels. Participants enjoyed the realistic and engaging experience, suggesting EMPop’s potential for enriching interactive installations in museums and galleries.

Late-Breaking Work

Towards the Safety of Film Viewers from Sensitive Content: Advancing Traditional Content Warnings on Film Streaming Services

CHI'24

Soyeong Min (KAIST); Minha Lee (KAIST); Sangsu Lee (KAIST)

Traditional content warnings on film streaming services are limited to warnings in the form of text or pictograms that only offer broad categorizations at the start for a few seconds. This method does not provide details on the timing and intensity of sensitive scenes. To explore the potential for improving content warnings, we investigated users’ perceptions of the current system and their expectations for a new content warning system. This was achieved through participatory design workshops involving 11 participants. We found users’ expectations in three aspects: 1) develop a more nuanced understanding of their personal sensitivities beyond content sensitivities, 2) enable a trigger-centric film exploration process, and 3) allow for predictions regarding the timing of scenes and mitigating the intensity of sensitive content. Our study initiates a preliminary exploration of advanced content warnings, incorporating users’ specific expectations and creative ideas, with the goal of fostering safer viewing experiences.

MOJI: Enhancing Emoji Search System with Query Expansions and Emoji Recommendations

CHI'24

Yoo Jin Hong (KAIST); Hye Soo Park (KAIST); Eunki Joung (KAIST); Jihyeong Hong (KAIST)

The text-based emoji search, despite its widespread use and extensive variety of emojis, has received limited attention in terms of understanding user challenges and identifying ways to support users. In our formative study, we found the bottlenecks in text-based emoji searches, focusing on challenges in finding appropriate search keywords and user modification strategies for unsatisfying searches. Building on these findings, we introduce MOJI, an emoji entry system supporting 1) query expansion with content-relevant multi-dimensional keywords reflecting users’ modification strategies and 2) emoji recommendations that belong to each search query. The comparison study demonstrated that our system reduced the time required to finalize search keywords compared to traditional text-based methods. Additionally, users achieved higher satisfaction in final emoji selections through easy attempts and modifications on search queries, without increasing the overall selection time. We also present a comparison of emoji suggestion algorithms (GPT and iOS) to support query expansion.

Supporting Interpersonal Emotion Regulation of Call Center Workers via Customer Voice Modulation

CHI'24

Duri Lee (KAIST); Kyungmin Nam (Delft University of Technology (TU Delft)); Uichin Lee (KAIST)

Call center workers suffer from the aggressive voices of customers. In this study, we explore the possibility of proactive voice modulation or style transfer, in which a customer’s voice can be modified in real time to mitigate emotional contagion. As a preliminary study, we conducted an interview with call center workers and performed a scenario-based user study to evaluate the effects of voice modulation on perceived stress and emotion. We transformed the customer’s voice by modulating its pitch and found its potential value for designing a user interface for proactive voice modulation. We provide new insights into interface design for proactively supporting call center workers during emotionally stressful conversations.

Understanding Visual, Integrated, and Flexible Workspace for Comprehensive Literature Reviews with SketchingRelatedWork

CHI'24

Donghyeok Ma (KAIST); Joon Hyub Lee (KAIST); Seok-Hyung Bae (KAIST)

Writing an academic paper requires significant time and effort to find, read, and organize many related papers, which are complex knowledge tasks. We present a novel interactive system that allows users to perform these tasks quickly and easily on the 2D canvas with pen and multitouch inputs, turning users’ sketches and handwriting into node-link diagrams of papers and citations that users can iteratively expand in situ toward constructing a coherent narrative when writing Related Work sections. Through a pilot study involving researchers experienced in publishing academic papers, we show that our system can serve as a visual, integrated, and flexible workspace for conducting comprehensive literature reviews.

Unveiling the Inherent Needs: GPT Builder as Participatory Design Tool for Exploring Needs and Expectation of AI with Middle-Aged Users

CHI'24

Huisung Kwon (KAIST); Yunjae Josephine Choi (KAIST); Sunok Lee (Aalto University); Sangsu Lee (KAIST)

A generative session that directly involves users in the design process is an effective way to design user-centered experiences by uncovering intrinsic needs. However, engaging users who lack coding knowledge in AI system design poses significant challenges. Recognizing this, the recently revealed GPT-creating tool, which allows users to customize ChatGPT through simple dialog interactions, is a promising solution. We aimed to identify the possibility of using this tool to uncover users’ intrinsic needs and expectations towards AI. We conducted individual participatory design workshops with generative sessions focusing on middle-aged individuals. This approach helped us to delve into the latent needs and expectations of conversational AI among this demographic. We discovered a wide range of unexpressed needs and expectations for AI among them. Our research highlights the potential and value of using the GPT-creating tool as a design method, particularly for revealing users’ unexpressed needs and expectations.

DirActor: Creating Interaction Illustrations by Oneself through Directing and Acting Simultaneously in VR

CHI'24

Seung-Jun Lee (KAIST); Siripon Sutthiwanna (KAIST); Joon Hyub Lee (KAIST); Seok-Hyung Bae (KAIST)

In HCI research papers, interaction illustrations are essential to vividly expressing user scenarios arising from novel interactions. However, creating these illustrations through drawing or photography can be challenging, especially when they involve human figures. In this study, we propose the DirActor system that helps researchers create interaction illustrations in VR that can be used as-is or post-processed, by becoming both the director and the actor simultaneously. We reproduced interaction illustrations from past ACM CHI Best and Honorable Mention papers using the proposed system to showcase its usefulness and versatility.

Supporting Novice Researchers to Write Literature Review using Language Models

CHI'24

Kiroong Choe (Seoul National University); Seokhyeon Park (Seoul National University); Seokweon Jung (Seoul National University); Hyeok Kim (Northwestern University); Ji Won Yang (Seoul National University); Hwajung Hong (KAIST); Jinwook Seo (Seoul National University)

A literature review requires more than summarization. While language model-based services and systems increasingly assist in analyzing accurate content in papers, their role in supporting novice researchers to develop independent perspectives on literature remains underexplored. We propose the design and evaluation of a system that supports the writing of argumentative narratives from literature. Based on the barriers faced by novice researchers before, during, and after writing, identified through semi-structured interviews, we propose a prototype of a language-model-assisted academic writing system that scaffolds the literature review writing process. A series of workshop studies revealed that novice researchers found the support valuable as they could initiate writing, co-create satisfying contents, and develop agency and confidence through a long-term dynamic partnership with the AI.

Bimanual Interactions for Surfacing Curve Networks in VR

CHI'24

Sang-Hyun Lee (KAIST); Joon Hyub Lee (KAIST); Seok-Hyung Bae (KAIST)

We propose an interactive system for authoring 3D curve and surface networks using bimanual interactions in virtual reality (VR) inspired by physical wire bending and film wrapping. In our system, the user can intuitively author 3D shapes by performing a rich vocabulary of interactions arising from a minimal gesture grammar based on hand poses and firmness of hand poses for constraint definition and object manipulation. Through a pilot test, we found that the user can quickly and easily learn and use our system and become immersed in 3D shape authoring.

VR-SSVEPeripheral: Designing Virtual Reality Friendly SSVEP Stimuli using Peripheral Vision Area for Immersive and Comfortable Experience

CHI'24

Jinwook Kim (KAIST); Taesu Kim (KAIST); Jeongmi Lee (KAIST)

Recent VR HMDs embed various bio-sensors (e.g., EEG, eye-tracker) to expand the interaction space. Steady-state visual evoked potential (SSVEP) is one of the most utilized methods in BCI, and recent studies are attempting to design novel VR interactions with it. However, most of them suffer from usability issues, as SSVEP uses flickering stimuli to detect target brain signals that could cause eye fatigue. Also, conventional SSVEP stimuli are not tailored to VR, taking the same form as in a 2D environment. Thus, we propose VR-friendly SSVEP stimuli that utilize the peripheral, instead of the central, vision area in HMD. We conducted an offline experiment to verify our design (n=20). The results indicated that VR-SSVEPeripheral was more comfortable than the conventional one (Central) and functional for augmenting synchronized brain signals for SSVEP detection. This study provides a foundation for designing a VR-suitable SSVEP system and guidelines for utilizing it.

Special Interest Group

A SIG on Understanding and Overcoming Barriers in Establishing HCI Degree Programs in Asia

CHI'24

Zhicong Lu (City University of Hong Kong); Ian Oakley (KAIST); Chat Wacharamanotham (Independent researcher)

Despite a high demand for HCI education, Asia’s academic landscape has a limited number of dedicated HCI programs. This situation leads to a brain drain and impedes the creation of regional HCI centers of excellence and local HCI knowledge. This SIG aims to gather stakeholders related to this problem to clarify and articulate its facets and explore potential solutions. The discussions and insights gained from this SIG will provide valuable input for the Asia SIGCHI Committee and other organizations in their endeavors to promote and expand HCI education across Asia. Furthermore, the findings and strategies identified can also serve as valuable insights for other communities in the Global South facing similar challenges. By fostering a comprehensive understanding of the barriers and brainstorming effective mitigating strategies, this SIG aims to catalyze the growth of HCI programs in Asia and beyond.

Workshop

Workshop on Building a Metaverse for All: Opportunities and Challenges for Future Inclusive and Accessible Virtual Environments

CHI'24

Callum Parker (University of Sydney); Soojeong Yoo (University College London); Joel Fredericks (The University of Sydney); Tram Thi Minh Tran (University of Sydney); Julie R. Williamson (University of Glasgow); Youngho Lee (Mokpo National University); Woontack Woo (KAIST)


Human-Centered Evaluation and Auditing of Language Models

CHI'24

Ziang Xiao (Johns Hopkins University, Microsoft Research); Wesley Hanwen Deng (Carnegie Mellon University); Michelle S. Lam (Stanford University); Motahhare Eslami (Carnegie Mellon University); Juho Kim (KAIST); Mina Lee (Microsoft Research); Q. Vera Liao (Microsoft Research)

The recent advancements in Large Language Models (LLMs) have significantly impacted numerous, and will impact more, real-world applications. However, these models also pose significant risks to individuals and society. To mitigate these issues and guide future model development, responsible evaluation and auditing of LLMs are essential. This workshop aims to address the current “evaluation crisis” in LLM research and practice by bringing together HCI and AI researchers and practitioners to rethink LLM evaluation and auditing from a human-centered perspective. The HEAL workshop will explore topics around understanding stakeholders’ needs and goals with evaluation and auditing LLMs, establishing human-centered evaluation and auditing methods, developing tools and resources to support these methods, building community and fostering collaboration. By soliciting papers, organizing invited keynote and panel, and facilitating group discussions, this workshop aims to develop a future research agenda for addressing the challenges in LLM evaluation and auditing.

Video Showcase

Design Exploration of Robotic In-Car Accessories for Semi-Autonomous Vehicles

CHI'24

Max Fischer (The University of Tokyo); Jongik Jeon (KAIST); Seunghwa Pyo (KAIST); Shota Kiuchi (The University of Tokyo); Kumi Oda (The University of Tokyo); Kentaro Honma (The University of Tokyo); Miles Pennington (The University of Tokyo); Hyunjung Kim (The University of Tokyo)
